Mythbusting: data sharing and privacy laws

On 17 July 2025, Emily Taylor gave the keynote address at the meeting of the International Data Law Forum in Berlin, on the subject of international data transfers. This is a copy of her remarks.

At 5:30 am on a September morning in 1999, Richard Branson was awoken to be told that the BA-sponsored London Eye had a technical problem. Branson himself takes up the narrative:

“They couldn’t erect it. They had the world’s press waiting to see it going up and I knew we had a duty to give them something to look at.”

So, he did: Virgin flew an airship over the site bearing the message ‘BA can’t get it up!’

But the vigorous competition between Branson’s Virgin Atlantic and British Airways conceals an underlying cooperation on aviation security. That voluntary collaboration on security is ingrained in the airline industry: it’s in everyone’s interest to keep planes in the air.

I’m here today to talk to you about scams and fraud, and about why voluntary, cross-border, multi-sector information sharing is essential if we’re going to make progress. Along the way, I’ll draw parallels with safeguarding in health and social care.

Data sharing across borders - why is it so difficult?

Data sharing across borders is not easy. Although I have a legal background, I now spend most of my time in the non-profit sector, thinking and writing about digital policy. So, I’m not going to lecture you about the law; you know much more about it than I do. Instead, I’m going to talk about the practical impact of a clash of law and culture between the EU and the US. I’ll also give you an example of how cross-border data sharing *can* work when organisations commit to cooperating on security and crime prevention, while continuing to compete fiercely on everything else.

Twenty-five years on from the Millennium Wheel, protecting people against scams and fraud is moving up the political agenda internationally. The harm is not just financial: the emotional impact is severe. We are not doing well as societies. If cybercrime were a country, it would have one of the largest economies in the world. And the first rule of cybercrime is: no one goes to jail.

Meanwhile, policing is still organised on an intensely local basis, just as it was back in the 1970s. It is therefore structurally unsuited to tackling the transnational nature of cybercrime. The UK estimates that fraud now represents 40% of crime in the UK, and that 80% of that fraud is cybercrime. Structures for international cooperation on cybercrime, such as the notoriously complex and slow mutual legal assistance treaty (MLAT) process, are out of step with the scale and speed of cybercrime.

Even if the police worked out how to cooperate internationally at cyber speed, there would still be an enormous capacity gap. This is why the involvement of the private sector, which is better resourced and more technically capable, is essential if we are to make progress.

The fraud attack chain

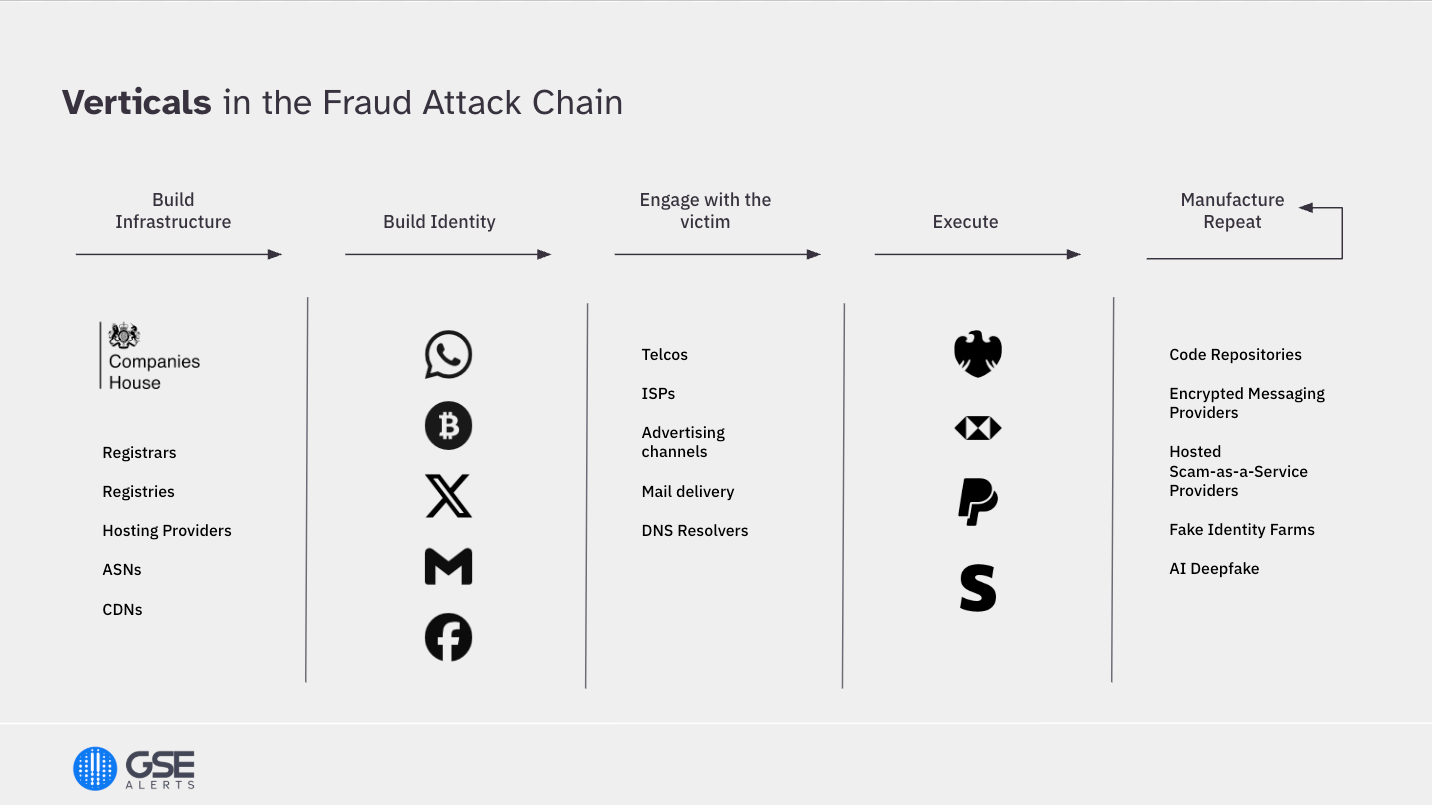

In today’s world, a scam’s journey crosses not only international borders but also numerous services. Cybercriminals build infrastructure, establish a false identity, engage with the victim and finally execute the fraud through a payment. Then they repeat the pattern, all in a matter of minutes or hours.

Until recently, there might have been some limited information sharing between organisations in some of these industry verticals, or at a national level. But there was no solution that provided for international, cross-industry, multi-directional signal sharing. So, people know what they know, but they don’t see the complete picture. Even the biggest players tell us this.

Until recently, transatlantic industry dialogue about data sharing resembled a circular firing squad, with the actors focused on blaming one another rather than cooperating to address the problem.

Operating only in silos, and failing to pool the fragments of information that different people hold, can have tragic consequences, as the next example shows.

Safeguarding and scam-fighting - the parallels

This is Victoria Climbié. She died from 128 separate injuries, and the pathologist who examined her described it as the worst case of child abuse they had ever seen. Victoria had been on the radar of the police, social services, two hospitals, paediatricians, housing departments, churches and a national child protection charity. All had contact with her and noted signs of abuse, but none took action.

Victoria’s aunt and her aunt’s boyfriend were convicted of her murder. The many reports and inquiries that followed highlighted a systemic failure which, had it been addressed, might have saved Victoria: a lack of information sharing. Her death led to multiple changes in the UK, including the development of systemic safeguarding structures that have information sharing at their heart.

You might question whether it’s appropriate to compare the abuse and death of a child with scams and fraud. I say that the comparison is valid for several reasons. First, multiple studies, including one that we conducted, show that the emotional harms caused by scams and fraud are severe. When victims report scams and fraud to the authorities, 47% say that they were made to feel ‘stupid or embarrassed’. The researcher Paul Raffile has been tracking the deaths by suicide of many teenage boys across the US and Europe as a result of online sextortion.

Second, and less emotionally charged but just as important, the comparison with safeguarding offers a policy lesson. We need to shift the burden of self-defence away from victims and onto others in society who are better placed to help. Finally on this topic, the safeguarding analogy transforms information sharing from a nice-to-have into the key to making a difference.

Sharing information can transform outcomes, whether in the care context or in fighting cybercrime. Yet, as Lord Laming pointed out, it is often impeded by mistaken interpretations of privacy laws:

“Despite the fact that the government gave clear guidance on information sharing… there continues to be a real concern across all sectors … about the risk of breaching confidentiality or data protection law by sharing concerns about a child’s safety. Whilst the law seeks to preserve individuals’ privacy and confidentiality, it should not be used (and was never intended) as a barrier to appropriate information sharing between professionals.”

(Lord Laming, 2009, *The Protection of Children in England: A Progress Report*).

Transatlantic data flows - an area of legal movement

The difficulties surrounding transatlantic data flows formed the basis of a special issue of the Chatham House Journal of Cyber Policy, of which I am the former editor.

Adoption of the internet across the globe has revolutionised the ways that people access information, live their lives, run businesses, learn and socialise. Inevitably, such a seismic disruption has also surfaced complex issues that are difficult to solve. Over the past decade or so, reactions to the Snowden revelations and cautious interpretations of the EU’s General Data Protection Regulation have undermined confidence in transatlantic data sharing for law enforcement and national security purposes. Is it too much of a leap of imagination to attribute the growth in cybercrime we looked at earlier, at least in part, to the difficulties of cross-border data sharing?

WHOIS and privacy - feeling the pain

I have experienced these strains first-hand in the ICANN environment. For more than 20 years, I have taken part in debates there about the publication of domain name registration data. I’m referring to a service known as WHOIS, a public directory that anyone could use to look up the registration details of domain name holders. The response was instant and free of charge.

As a policy debate, WHOIS reflects the competing, legitimate interests of public safety and individual privacy. When the ICANN WHOIS published everything, it naturally attracted complaints from civil society groups and open letters from the EU’s Article 29 Working Party.

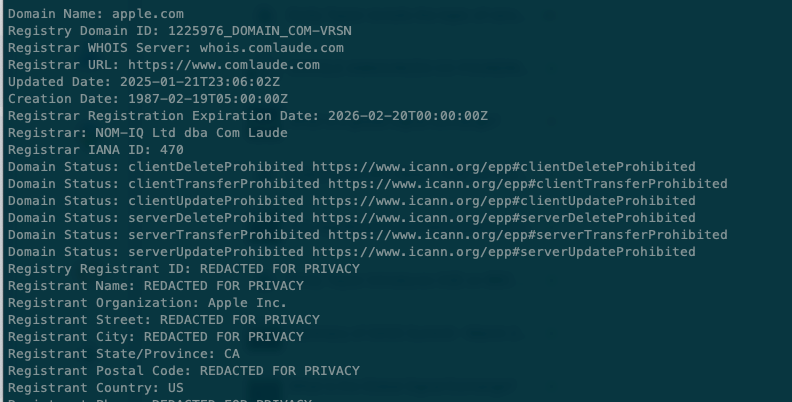

Everything changed in May 2018 with the arrival of GDPR. The ICANN community panicked and redacted registrant data from the public WHOIS, even where the registrant was an organisation.

That’s me back in 2018, taking part in an ‘expedited policy development process’ to respond to the impact of GDPR on WHOIS. But after a multi-year process, we failed. Registration data, even for companies such as Apple, shown here, is ‘redacted for privacy’.

Since when did massive corporations enjoy privacy protections designed for human beings?

And why such a cautious implementation of privacy laws?

Cultural differences between the EU and the US lead to divergent approaches, and GDPR’s extraterritorial effect brings those differences into play.

Mythbusting GDPR

Award-winning legal powerhouse Eleanor Duhs tells me that a man was seen recently at a legal conference wearing a T-shirt that read ‘Only God is GDPR compliant’. This highlights a key aspect of the EU law: it is aspirational in nature. That is anathema to the more litigious US environment, where a strict, black-letter interpretation of the law is applied. Faced with GDPR’s turnover-based fines, US companies are difficult to persuade to just ‘be a good sport’ and voluntarily shoulder the potential liability of sharing data.

Before going on, let’s bust some myths about privacy law:

- First of all, in a healthy democracy, the protection of fundamental rights is essential, especially in contexts where someone can be deprived of their liberty. Privacy laws set bright lines for the law enforcement community when fighting crime, and that is something to be respected and cherished.

- You all know this, but it’s worth stating anyway: privacy laws and criminal justice are not in opposition to one another. The recent guidance of the UK’s Information Commissioner is that privacy laws do not prevent you from sharing personal information where it is appropriate to do so, or from taking steps to prevent harm. This statement shouldn’t really have needed to be made, but it is welcome nonetheless.

Data sharing is an area of movement in the legal world, towards a more permissive environment. Several instruments have all been finalised in recent years:

- the OECD Declaration on Government Access to Personal Data Held by Private Sector Entities
- the Second Additional Protocol to the Budapest Convention
- the EU e-Evidence framework
- even the UN Convention against Cybercrime

All aim to cut red tape for international data transfers while providing appropriate safeguards for human rights.

At the same time, there are interesting developments in UK policing’s approach to the transnational nature of cybercrime. The UK’s National Crime Agency is developing bilateral cooperation with India and other countries that are not parties to the Budapest Convention but are regional centres of scams and fraud. Last week, the NCA announced a result of this cooperation: Indian police raided a call centre and arrested two individuals suspected of defrauding more than 100 victims in the UK.

The Global Signal Exchange - an industry-led approach to fighting scams and fraud

Voluntary, industry-led approaches illustrate that it is feasible to share threat signals, in a rights-respecting framework, to assist the fight against scams and fraud.

This is where the Global Signal Exchange (GSE) comes in. It is a non-profit, global clearinghouse for the real-time sharing of scam and fraud signals. It was established as a partnership between the Global Anti-Scam Alliance, Google and my organisation, Oxford Information Labs, which provides the technical system.

Here are a few points on how we protect privacy in the GSE:

- As a data sharer, you are in control of what happens to your data. You can share through protected pathways for 1:1 sharing or make signals freely available. Commercial feed providers also have resale options.

- All users are verified and vetted

- We’re exchanging information that is not sensitive from a PII point of view: domains, URLs and IP addresses. These have several advantages. Because they are universal identifiers, everyone can handle them, and they are immensely useful as signals of potential threats.

- No participant is compelled to take down content or dodgy domains; it’s a voluntary system. Yet early reports from users are extremely encouraging.

- Accounts are segregated, and the system has high levels of cybersecurity protection

- Our approach to the data we collect and process is closely mapped to the GDPR principles, including data minimisation.

- A feedback loop helps keep the data accurate and up to date

Since we opened our doors in January, the number of signals in the GSE has grown from 40 million to more than 300 million. We have more than 160 organisations onboarded or in the pipeline, and we are conducting pilots with several industry sectors and public sector partners. In a 1:1 exchange of signals from Google to Meta, Meta reported that the information was nearly 100% accurate, actionable and new to Meta. Meta used the information to enhance the training of its AI/ML detection systems. Finally, Google reports an uplift of between 10% and 60% in new information from the open signals in the GSE.

A complex picture, but signs of hope

In closing, privacy laws are not an annoyance. They need to be built into the design of a system from the outset, guiding the approach to data collection, processing and storage, and baking in safeguards. The digitisation of societies, and with it the digitisation of crime, has presented complex challenges both for the protection of individual privacy and for international policing. There are lessons to be learned from other sectors. One is the cooperation on security amid fierce competition between players in the airline industry. Another is the emergence of safeguarding as a discipline in health and social care, founded on the principle of sharing concerns even if they may turn out to be unfounded.

I’m here to tell you that there are signs of hope:

- Energetic policy thinking on how to free up transatlantic data flows, while also providing robust safeguards for privacy, and updates to multiple international agreements.

- In the private sector, we’re also seeing a change of approach, with top management committing to finding lawful, rights-respecting ways to share information between public and private sector partners internationally.

- The Global Signal Exchange approaches signal sharing by starting with the GDPR as the governing framework, keeping the data types simple and relatively low risk. Yes, it’s challenging to build the technical system and to negotiate multiple data sharing agreements with large multinational organisations. But there is also incredible momentum and support from those partners to create positive change and impact in the fight against scams and fraud.